With the release of SQL2016 just around the corner, there are an increasing number of posts appearing about new features that mean I don’t have to work. One post (which motivated me to write this) claimed that this breakthrough would mean we no longer needed to store historical values – an interesting interpretation of a Data Warehouse to say the least. Microsofts own content (here) recommends that there are huge productivity benefits in Slowly Changing Dimensions because you can compare the same key at two points in time.

The idea’s of temporary tables (recording every change to a table in an table_History table) is a cool feature – don’t get me wrong, I think its great. However, there is (sadly) a lacking

amount of discussion about how the feature can be incorporated into the Data Warehouse. Those that blindly follow the sales pitch “I don’t need a data warehouse” because I’ve got history tracking or perhaps “yeah, I’ve got a data warehouse – its a history tracked OLTP” will ensure their users cant get the data they need.

So lets call out some issues with reliance on temporal tables as a data warehouse replacement (and bring some data warehouse assumptions to the surface). I will focus on the star schema design since most references explicit refer to changing dimensions (however we can apply these ideas to other methodologies).

A fundamental construct of the star schema is the surrogate key (almost as fundamental as the concept of dimension and fact). Using the surrogate uniquely identifies an instance of a dimension at a point in time and therefore, state of the dimension can be precisely identified for the fact record. For example, if I sold a product on a date, I need to look up the product dimension and determine which version of the product was applicable on that date. The products surrogate key (not the Product Id or Code) is used in the fact. This is the fundamental design of the star schema. A temporal table does not provide you the capacity to do this – all it can do is provide the data to construct the star.

How could you solve this with temporary tables? Well, there may be the thought that you could concatenate the tables primary key and the records start date for uniqueness and then determine what (dimension) record is applicable to a fact record via a query. Interesting idea but not something I’d take on litely. I suspect that performance would degenerate so quickly both the BI users and the Ops users (remember that this is occurring on the OLTP) would walk away in droves. Remember that this has to occur for every record in the fact – (and yes they are those LONG narrow tables)!

So lets leave it to the presentation tool – pick one, Power BI, SSAS, Tableau, Qlik, Jedox, ….. All these products rely on uniqueness between separated tables so we still require the surrogate to enforce and deliver uniqueness. The star (or at least the principle) is still required.

The real power of the dimension (and to a lesser extent the fact) is that it adds business context that does not exist (or can not be easily calculated). Of course this is my opinion but think about it for a moment. Forget about historic values for a moment – raw information is in the source, if the user wanted that you could give it to them no problem. What the star gives is a modeled perspective of a particular part of the business. Consider a customer dimension – what adds values in analysis? It is often the supplementary data (age group, segment profile, status classification, targeted customer …. ) and all of these things are defined and stored in the dimension. So, if we are going to add value (as data warehousing professionals), we still need the dimension to provide this.

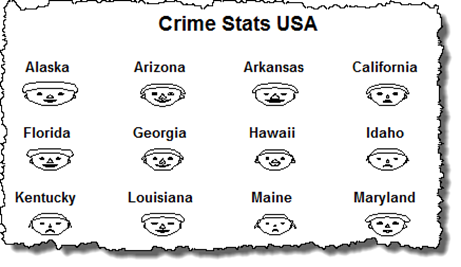

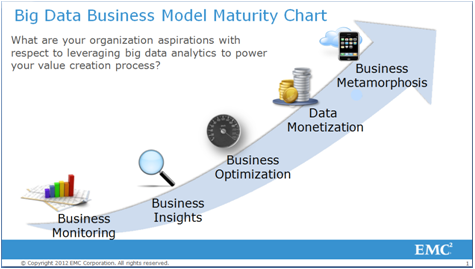

All business applications offer some form of reporting – if you’ve ever seen an information maturity chart, it is the first stage of maturity (see below thanks to an EMC2 slide deck).

Riddle me this then, if the source application (OLTP) provides reporting why do we need a data warehouse? Show reports at a particular point in time? Maybe – (although a lot of users struggle with this and tend to think in current terms). There are a lot of tools that provide adhoc query (OLAP) capabilities so performance the performance of analysis isn’t a real consideration (after all, they could just use an OLAP engine over the OLTP right?).

I think one of the primary reasons is integration. We want to either integrate data from different systems (creating an enterprise view) or we want to supplement current data with with other, richer information (which is really just integration anyway isn’t it). We could even say that business rules and derived information falls into this category.

Here also temporal tables do not negate the need for the data warehouse. The data warehouse is responsible for delivering a consistent, conformed, business verified data that incorporates information from various sources. Nothing changed there (and still the need for a data warehouse).

Finally, lets consider the dimension. That subject orientated view of an object. Its the Product table that tells me everything I need to know about a Product – its category, groupings, margin positions and alike. The dimension is notorious for redundancy and de-normalisation but that’s the price we are prepared to pay for

delivering a single concise view to a user because it breaks down the complexity of the model for the user (they don’t have to combine products to product categories in a query). The idea that we have de-normalise breaks the basic OLTP conventions which force normalisation (after all, we expect 3rd normal form).

The data warehouse is designed to do this work for us and present the view to the user. Essentially, its just another integration problem but one that’s handled (and hidden) by the data warehouse. Our BI tools (again with the presentation layers) may be able to create these consolidations for us however we are still presented with the issue of uniqueness in related table records (that is, we have to identify which category related to a product at a point in time and the BI tools will not do that for us).

So, are temporal tools a replacement for a data warehouse? I think not, sure they may be able to assist with record change tracking (we haven’t discussed the shift in OLTP resource management). Temporary tables are only a tool and I’d be very careful of anyone that claims they could replace a data warehouse.