In previous posts we looked at using Biml to extract data from our database tables to flat files and raw files. While these destinations can be handy, the majority of extracts that I use are to staging tables in a relational engine. This post looks at the Biml required to send our data to ODBC destinations.

The Environment

For the purpose of demonstration, I will use the DimCustomer table from adventure works. There’s nothing too complicated about that table, all I want to do is extract some data from it so we’ll just use a simple query (with code, first name and last name);

select CustomerAlternateKey , FirstName , LastName from dbo.DimCustomer;

For the destination, we’ll look at two options. Firstly we will rely on an auto mapping feature (so that we do not specify column mapping between the source and destination). Secondly, we will override that feature and specify column to column mapping. For this I’ll create two tables in a staging database called customer_automapping (with the same field names as the source) and customer_manualmapping (with different names). The SQL for these tables is given below;

create table dbo.customer_automapping ( CustomerAlternateKey nvarchar(25) , FirstName nvarchar(50) , LastName nvarchar(50) ); create table dbo.customer_manual ( customer_cd nvarchar(25) , first_name nvarchar(50) , last_name nvarchar(50) );

Proforma Template

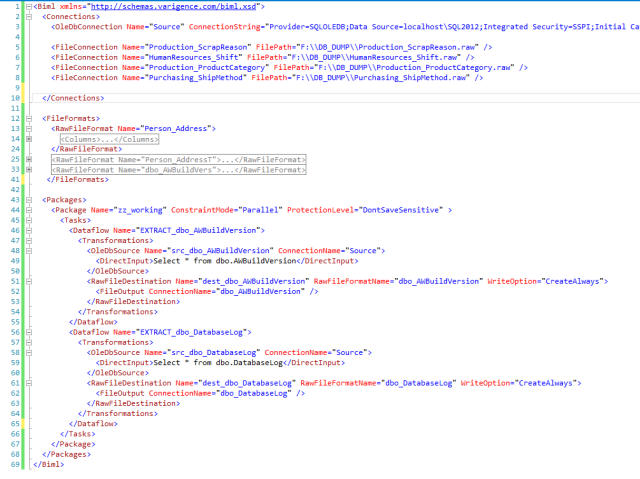

We’ll use Biml to create two parallel dataflows in a package (the package will be called copy_customer) and the data flows will be called customer_automapping and customer_manualmapping. Our template package looks like the one below;

<Biml xmlns="http://schemas.varigence.com/biml.xsd">

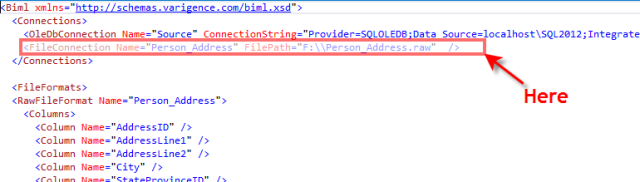

<Connections>

<OdbcConnection Name="dest_stage" ConnectionString="Driver={SQL Server Native Client 11.0};...." />

<OdbcConnection Name="src_adventure_works" ConnectionString="Driver={SQL Server Native Client 11.0};..." />

</Connections>

<Packages>

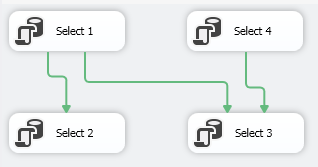

<Package Name="copy_customer" ConstraintMode="Parallel">

<Tasks>

<Dataflow Name="customer_manualmapping">

<Transformations>

</Transformations>

</Dataflow>

<Dataflow Name="customer_automapping">

<Transformations>

</Transformations>

</Dataflow>

</Tasks>

</Package>

</Packages>

</Biml>

Note we have already define placeholders for each dataflow, all we have to do is populate those (transformation) nodes. We also define our source and destination connections.

The ODBC Source

We have previously addressed an ODBC source so for simplicity will just add the code. This needs to be added to each dataflow transformation node;

<OdbcSource Name="src_adventure_works" Connection="src_adventure_works"> <DirectInput> select CustomerAlternateKey, FirstName, LastName from dbo.DimCustomer; </DirectInput> </OdbcSource>

The ODBC Destination

We’d expect the ODBC destination to require a name (since every item requires one), connection and a table (name). These are the only requirements for an automapping feature (where the source fields are automatically mapped to the destination fields on the basis of name). The snippet for the automapping dataflow is therefore;

<Dataflow Name="customer_automapping"> <Transformations> <OdbcSource Name="src_adventure_works" Connection="src_adventure_works"> <DirectInput> select CustomerAlternateKey, FirstName, LastName from dbo.DimCustomer; </DirectInput> </OdbcSource> <OdbcDestination Name="dest_customer" Connection="dest_stage" > <ExternalTableOutput Table="[dbo].[customer_automapping]" /> </OdbcDestination> </Transformations> </Dataflow>

Note that the table is defined by the ExternalTableOutput tag. There is no need to fully qualify the table as I have done (by inclusion of the schema) and <ExternalTableOutput

Table=“customer_automapping“ />

would suffice provided the default schema for the connection was the same as the table. As expected, our dataflow creates the source and destination components and automatically maps fields from the source to the destination based on name.

To explicitly set the source to target mappings, we need to include a columns (and column(s)) tag as a child node of the destination. The Biml for this is below but it is worth identifying that mapping will only be overwritten if it exists in this tag. For example, if two columns have the same name and there is no mapping column specification, the two fields will be auto matched. Therefore, to fully specify the mapping in the ODBC destination we would use the following snippet;

<OdbcDestination Name="dest_customer" Connection="dest_stage" > <ExternalTableOutput Table="[dbo].[customer]" /> <Columns> <Column SourceColumn="CustomerAlternateKey" TargetColumn="customer_cd" /> <Column SourceColumn="FirstName" TargetColumn="first_name" /> <Column SourceColumn="LastName" TargetColumn="last_name" /> </Columns> </OdbcDestination>

It’s clear to see that the mapping occurs between the SourceColumn and TargetColumn attributes of each column node.

The Complete Biml File

For completeness, we’ll add the complete Biml Code;

<Biml xmlns="http://schemas.varigence.com/biml.xsd">

<Connections>

<OdbcConnection Name="dest_stage" ConnectionString="Driver={SQL Server Native Client 11.0};…." />

<OdbcConnection Name="src_adventure_works" ConnectionString="Driver={SQL Server Native Client 11.0};…;" />

</Connections>

<Packages>

<Package Name="copy_customer" ConstraintMode="Parallel">

<Tasks>

<Dataflow Name="customer_manualmapping">

<Transformations>

<OdbcSource Name="src_adventure_works" Connection="src_adventure_works">

<DirectInput>

select CustomerAlternateKey, FirstName, LastName from dbo.DimCustomer;

</DirectInput>

</OdbcSource>

<OdbcDestination Name="dest_customer" Connection="dest_stage" >

<ExternalTableOutput Table="[dbo].[customer]" />

<Columns>

<Column SourceColumn="CustomerAlternateKey" TargetColumn="customer_cd" />

<Column SourceColumn="FirstName" TargetColumn="first_name" />

<Column SourceColumn="LastName" />

</Columns>

</OdbcDestination>

</Transformations>

</Dataflow>

<Dataflow Name="customer_automapping">

<Transformations>

<OdbcSource Name="src_adventure_works" Connection="src_adventure_works">

<DirectInput>

select CustomerAlternateKey, FirstName, LastName from dbo.DimCustomer;

</DirectInput>

</OdbcSource>

<OdbcDestination Name="dest_customer" Connection="dest_stage" >

<ExternalTableOutput Table="[dbo].[customer_automapping]" />

</OdbcDestination>

</Transformations>

</Dataflow>

</Tasks>

</Package>

</Packages>

</Biml>

Overwriting the Automap

There may be a situation where you want to Automap some fields but not others (say for example we did not want to populate the CustomerAlternateKey field). In this situation we can rely on auto-mapping and specify the column as not being used through the IsUsed attribute. For example, we could use;

<OdbcDestination Name="dest_customer" Connection="dest_stage" > <ExternalTableOutput Table="[dbo].[customer_automapping]" /> <Columns> <Column SourceColumn="CustomerAlternateKey" IsUsed="false" /> </Columns> </OdbcDestination>