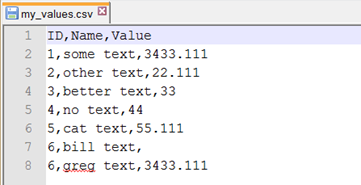

Following on from the last post on dimension processing, the next operation in our SSAS processing strategy is to process measure group partitions. If we remember our scenario, our SSAS database takes too long to process (when we try a full process), so we are forced to process dimensions using a ProcessUpdate and then specific partitions using ProcessData. Of course we only want to process those partitions that have the most recent data and we want to automate this using biml. That is, we want to point our biml package at a SSAS database and have the package generated by some logic.

The Logic

|

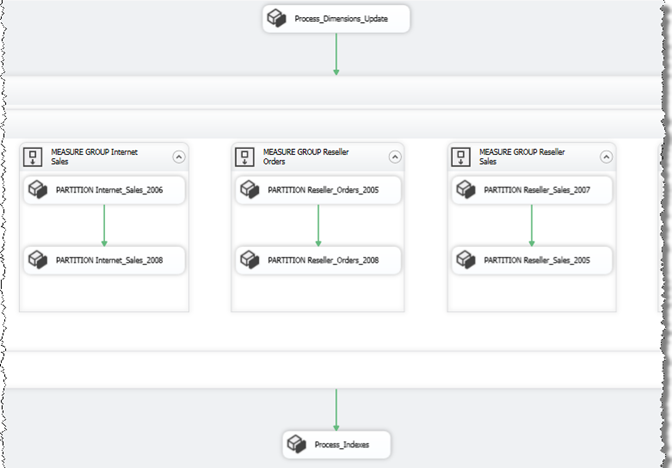

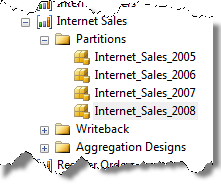

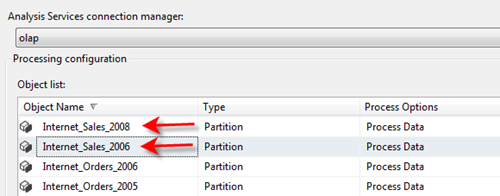

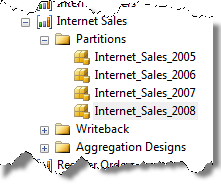

Lets assume that we have a group of partitions in each measure group (see left). We will assume that the last partitions processed for the measure group are the most current and therefore, we should choose the last n number of partitions to process (this is based on the individual partitions last processed date).

If you think about how you would manage this in a system, consider adding 2009 data. When 2009 comes along, we add a partition and process it. Now that this ‘new’ partition has been added, we want to continue processing it along with the (last processed) partition (ie 2008). |

In summary, our processing logic needs to look for the last n partitions based on the last process date of the measure group partitions.

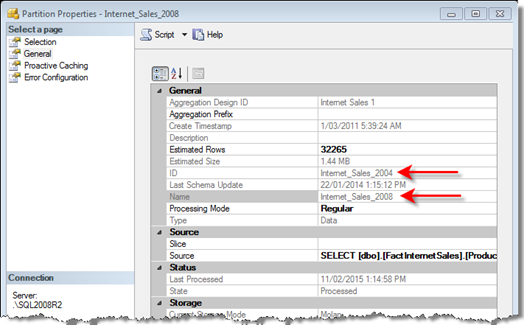

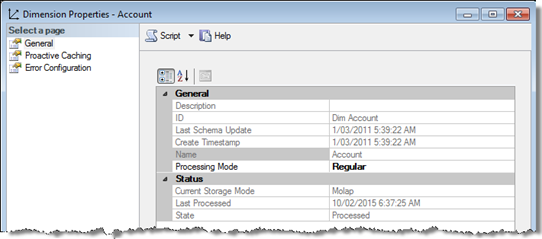

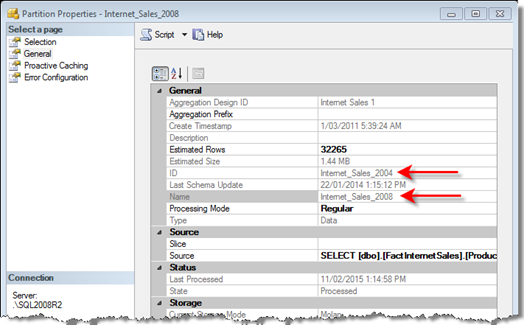

I will explain this in the code below but there is one little (perhaps very confusing) issue that I’ve found with Adventure Works and you may find this in your version. If I look at the properties for the Internet Sales 2008 partition, I can see the date it was last processed, its name and its ID. In my code, I’ll be using ID but take a look at the ID and Name. The ID is Internet_Sales_2004 however, the name is Internet_Sales_2008 … confusing right?

What i need is a way to iterate over the partitions in a SSAS database determining which ones where processed last (say for example the last 2 partitions). I can demonstrate this in the following psuedo code;

server <- connect to SSAS server

database <- connect to a database on server

foreach (cube in database)

{

foreach (measure group in cube)

{

foreach (partition in measure group)

get the last n processed partitions

}

}

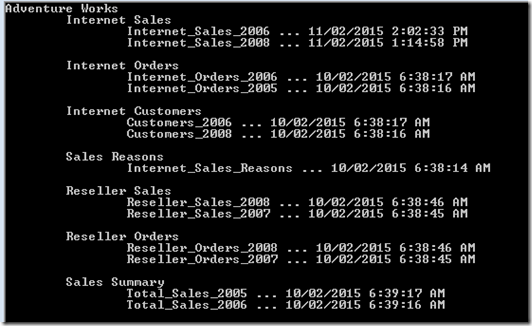

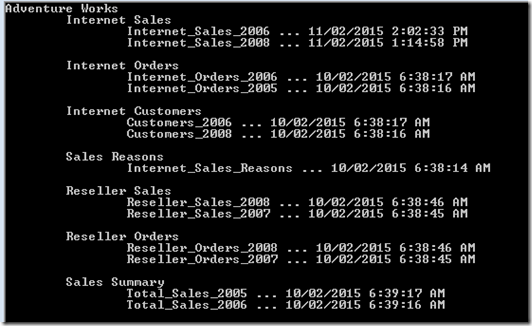

If I run this against my Adventure Works instance, I may get something like this (assuming that I simply print the last 2 partitions). We can see, the Cube, Measure Group and Partitions at individual levels

Here’s the code to produce that output. We can see the iteration of cubes, measure groups and partitions. Note that I’ve also defined a function Partitions to return a sorted table of partitions with the PARTITION_NAME, PARTITION_ID and (last) PROCESS_DT. I’ll have to also include a reference to AMO.

static void Main(string[] args)

{

string _server_name = @"Data Source=.\SQL2008R2";

string _database_name = "Adventure Works DW 2008R2";

int _max_partitions = 2;

Server _ssas_server = new Server();

_ssas_server.Connect(_server_name);

Database _db = _ssas_server.Databases[_database_name];

foreach (Cube _c in _db.Cubes)

{

Console.WriteLine(_c.Name);

foreach (MeasureGroup _mg in _c.MeasureGroups)

{

Console.WriteLine("\t{0}", _mg.Name);

var _listPartitions = Partitions(_ssas_server, _ssas_database, _c.ID, _mg.ID);

for (int _i = 0; _i < _max_partitions & _i < _listPartitions.Count; _i++ )

Console.WriteLine("\t\t{0},{1}", _listPartitions[_i].PARTITION_NAME, _listPartitions[_i].PROCESS_DATE);

Console.WriteLine();

}

}

}

public static List<PartitionStruct> Partitions(string ServerName, string DatabaseName, string CubeId, string MeasureGroupId)

{

var _ret = new List<PartitionStruct>();

Server _ssas_server = new Server();

_ssas_server.Connect(ServerName);

Database _db = _ssas_server.Databases[DatabaseName];

Cube _c = _db.Cubes[CubeId];

MeasureGroup _mg = _c.MeasureGroups[MeasureGroupId];

foreach (Partition _p in _mg.Partitions)

{

_ret.Add(new PartitionStruct(_p.Name.ToString(), _p.ID.ToString(), _p.LastProcessed));

}

_ssas_server.Disconnect();

_ssas_server.Dispose();

_ret.Sort((y, x) => x.PROCESS_DATE.CompareTo(y.PROCESS_DATE));

return _ret;

}

public struct PartitionStruct

{

public readonly string PARTITION_NAME;

public readonly string PARTITION_ID;

public readonly DateTime PROCESS_DATE;

public PartitionStruct(string partition_NAME, string partition_ID, DateTime process_DATE)

{

PARTITION_NAME = partition_NAME;

PARTITION_ID = partition_ID;

PROCESS_DATE = process_DATE;

}

}

Over To Biml

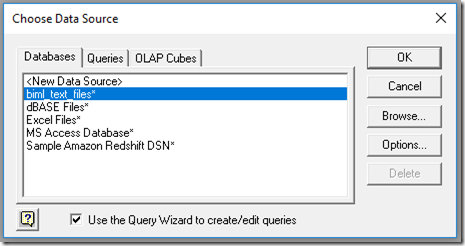

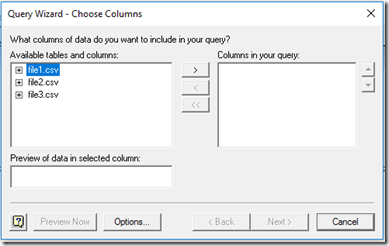

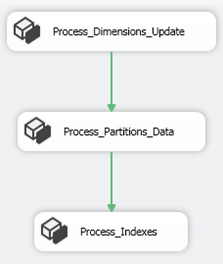

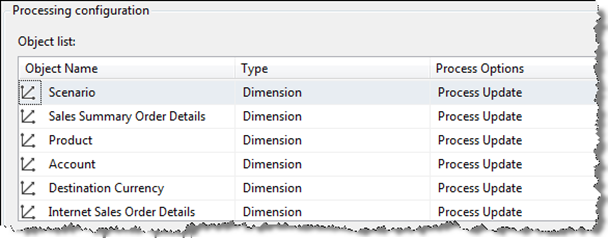

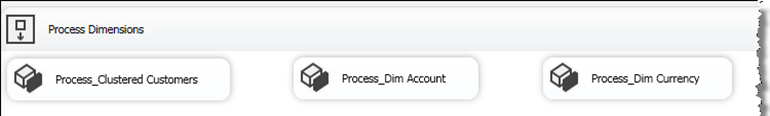

In using biml, I simply want to create a package that has a single PartitionProcessing task in it. Partition processing is just a processing configuration for the AnalysisServicesProcessing task and its use should become apparent once we see the generated biml. We simply specify the partition that we want to process. Our complete biml script is;

<#@ assembly name="C:\Program Files (x86)\Microsoft SQL Server\110\SDK\Assemblies\Microsoft.AnalysisServices.dll" #>

<#@ import namespace="System.Data" #>

<#@ import namespace="Microsoft.AnalysisServices" #>

<#@ template language="C#" #>

<#

String _ssas_server = @".\SQL2008R2";

String _ssas_database = "Adventure Works DW 2008R2";

String _process_type = "ProcessData";

int _max_partitions = 2;

#>

<#+

public static List<PartitionStruct> Partitions(string ServerName, string DatabaseName, string CubeId, string MeasureGroupId)

{

var _ret = new List<PartitionStruct>();

Server _ssas_server = new Server();

_ssas_server.Connect(ServerName);

Database _db = _ssas_server.Databases[DatabaseName];

Cube _c = _db.Cubes[CubeId];

MeasureGroup _mg = _c.MeasureGroups[MeasureGroupId];

foreach (Partition _p in _mg.Partitions)

{

_ret.Add(new PartitionStruct(_p.Name.ToString(), _p.ID.ToString(), _p.LastProcessed));

}

_ssas_server.Disconnect();

_ssas_server.Dispose();

_ret.Sort((y, x) => x.PROCESS_DATE.CompareTo(y.PROCESS_DATE));

return _ret;

}

public struct PartitionStruct

{

public readonly string PARTITION_NAME;

public readonly string PARTITION_ID;

public readonly DateTime PROCESS_DATE;

public PartitionStruct(string partition_NAME, string partition_ID, DateTime process_DATE)

{

PARTITION_NAME = partition_NAME;

PARTITION_ID = partition_ID;

PROCESS_DATE = process_DATE;

}

}

#>

<Biml xmlns="http://schemas.varigence.com/biml.xsd">

<Connections>

<AnalysisServicesConnection Name="olap" ConnectionString="Data Source=<#= _ssas_server #>;PROVIDER=MSOLAP;Impersonation Level=Impersonate;" Server="<#= _ssas_server #>" />

</Connections>

<Packages>

<Package Name="ProcessSassPartitions" ConstraintMode="Linear">

<Tasks>

<AnalysisServicesProcessing Name="Process Partitions" ConnectionName="olap" >

<ProcessingConfigurations>

<#

Server _ssas_server_ = new Server();

_ssas_server_.Connect(_ssas_server);

Database _db = _ssas_server_.Databases[_ssas_database];

foreach (Cube _c in _db.Cubes)

{

foreach (MeasureGroup _mg in _c.MeasureGroups)

{

List<PartitionStruct> _listPartitions = Partitions(_ssas_server, _ssas_database, _c.ID, _mg.ID);

for (int _i = 0; _i < _max_partitions & _i < _listPartitions.Count; _i++ ) { #>

<PartitionProcessingConfiguration DatabaseId="<#= _db.ID #>" CubeId="<#= _c.ID #>" MeasureGroupId="<#= _mg.ID #>" PartitionId="<#= _listPartitions[_i].PARTITION_ID #>" ProcessingOption="ProcessData" />

<# }

}

} #>

</ProcessingConfigurations>

</AnalysisServicesProcessing>

</Tasks>

</Package>

</Packages>

</Biml>

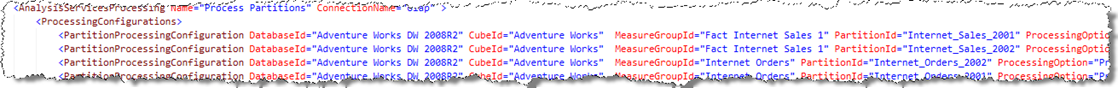

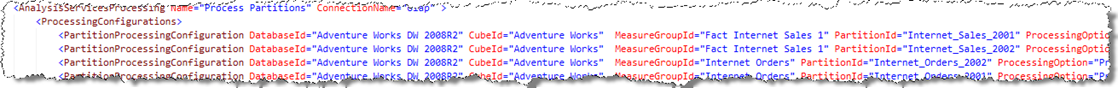

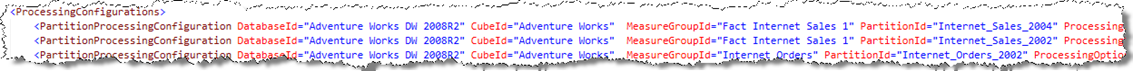

To see how this code generates biml, I want to validate the output, so lets test it. In Adventure Works, the 2005 and 2006 partitions in the Internet Sales measure group. These have the ID’s Internet_Sales_2001 and Internet_Sales_2002 (the measure group ID is Fact Internet Sales 1). Below, I can see the generated biml and verify that the correct partitions have been chosen.

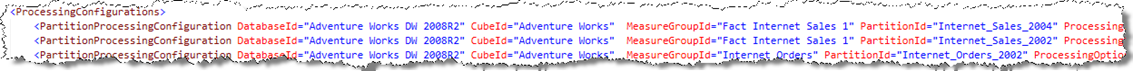

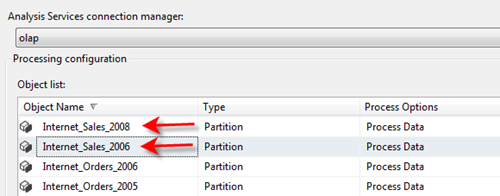

Now, if I’ll process the 2008 partition (through SSMS) which has an ID of Internet_Sales_2004. Assuming I processed the 2006 partition last (in the prior step), I’d expect the 2006 and 2008 partition to be in my processing list. That’s exactly what I see (as in the screen shot below).

Of course my generated package will show the names I expect (Internet_Sales_2008 and Internet_Sales_2006).

Conclusion

So what’s the purpose of this snippet? It provides a way to automate the creation of a SSAS processing task by examining the meta-data of existing database partitions. We assume that we want to continue to process each measure group’s last n partitions and the package is generated for us.